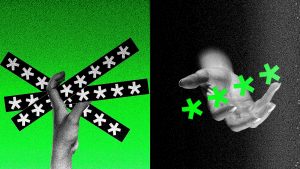

Microsoft’s AI Can Be Turned Into an Automated Phishing Machine

Microsoft’s AI technology could be used for harm if it falls into the wrong hands. Researchers have found that large language models such as GPT-3 can be manipulated into generating convincing phishing emails that trick unsuspecting recipients into giving up their personal information.

Phishing attacks have increased in recent years, and AI helps cybercriminals create more sophisticated, targeted scams. If an attacker feeds the model information about a potential victim, such as their interests, job title, or recent online activity, the resulting phishing emails can be tailored to be highly convincing.

An automated phishing machine of this kind poses a significant threat to individuals and organizations alike. A successful attack can lead to identity theft, financial loss, and even reputational damage if sensitive information is compromised. Individuals should be cautious with unsolicited emails and verify the legitimacy of any request for personal information.

Microsoft and other tech companies are working to combat the misuse of AI for malicious purposes. They are developing algorithms and tools to detect and block phishing attacks, and they are teaching users how to spot and report suspicious emails.

Individuals and businesses should stay informed about the risks of AI-powered phishing and take the necessary precautions to protect their data. By staying vigilant and proactive, we can help mitigate the threat of automated phishing and safeguard our personal information.